For significant celebrations - milestone birthdays, anniversaries - we create family videos. Every time we get more ambitious: more people to include, less time, and better video editing.

An email is sent asking for video wishes from extended family and friends. Multiple deadlines are set and missed each time. Someone important is always asking for another day. It is a frantic finish, right up to the moment we need to play it.

The tech-savvy cousins, nephews and nieces are selected for this task. I was recently asked to help with my cousin’s wedding video. Due to COVID, our very large family could not be present. Given how frequent and time-consuming such projects are, I was keen to discover a better way.

As I thought about it, I listed the following as requirements:

- Place the videos in a template specifying borders and backgrounds, images/icons, text (name of the relative), etc

- Define the sequence of the clips

- Quickly trigger a new version as new clips come in or when either of the above two is edited

- And most importantly, it should not run on my laptop. I don’t have the space to copy the files to my local machine, and my CPU fan already runs at max speed even without such CPU-intensive tasks.

A large number of people were responsible for collecting videos from different groups of relatives and friends. We collected the videos in a shared Google Drive folder, which removed a lot of the friction. We trimmed the videos individually to reduce them to their most interesting parts. Luckily for me, I didn’t have to watch the videos multiple times. We got the timestamps from my Aunt & Uncle, and my cousin edited the clips in iMovie. Automating this grunt work is a much harder project in itself.

Next we created a sequence file that provided parameters for the template. Columns - file name, caption to be displayed, overlay image 1, background colour, overlay image 2.

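| File name | Caption | Overlay image 1 | Background colour | Overlay image 2 |
| --- | --- | --- | --- | --- |
| Beginning Video with Music.mp4 | | | | |
| Mom & Dad.mp4 | | | | |
| Relative1.mov | Name 1 | mandala2.png | #032B60 | SN2s.jpg |
| Relative2.MOV | Name 2 | mandala2.png | #8F3A6F | SN14s.jpg |
| Relative3.mov | Name 3 | mandala1.png | #70094A | SN14s.jpg |
| Relative4.mov | Name 4 | mandala2.png | #3750A8 | SN13s.jpg |
| Relative5.mp4 | Name 5 | mandala3.png | #A67761 | SN1s.jpg |
| Relative6.mp4 | Name 6 | mandala3.png | #A67761 | SN1s.jpg |
| Ending Video with Music.mp4 | | | | |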
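I have not said above how the columns are delimited, so treat the following as a minimal sketch rather than the project's actual parser. It assumes a tab-separated file (captions and file names contain spaces, so splitting on plain whitespace would not work), and the names `Clip` and `parse_sequence` are made up for illustration.

```python
import csv
import io
from dataclasses import dataclass
from typing import List, Optional, TextIO


@dataclass
class Clip:
    """One row of the sequence file (hypothetical structure)."""
    file_name: str
    caption: Optional[str] = None
    overlay_1: Optional[str] = None
    background: Optional[str] = None
    overlay_2: Optional[str] = None


def parse_sequence(stream: TextIO) -> List[Clip]:
    """Read tab-separated rows, keeping the order in which they appear."""
    clips = []
    for row in csv.reader(stream, delimiter="\t"):
        fields = [field.strip() for field in row]
        if not fields or not fields[0]:
            continue  # skip blank lines
        # Rows such as the intro and outro carry only a file name,
        # so pad the missing columns and store them as None.
        fields += [""] * (5 - len(fields))
        clips.append(Clip(fields[0], *[f or None for f in fields[1:5]]))
    return clips


# Assumed sample input: two rows in the tab-separated layout described above.
sample = (
    "Beginning Video with Music.mp4\n"
    "Relative1.mov\tName 1\tmandala2.png\t#032B60\tSN2s.jpg\n"
)
for clip in parse_sequence(io.StringIO(sample)):
    print(clip.file_name, clip.caption, clip.background)
```

Keeping the rows in a list preserves the playback order, which is the whole point of the sequence file.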

Next we define the template. We extract parameters from the sequence file and generate a command using ffmpeg, a multimedia tool. The crux here is to generate a video filter (image overlays, background, text, sound normalization) and apply it to each video. The generated files are stored in order as 1.ts, 2.ts, etc.

    ffmpeg -hide_banner -i "$arg1" -t 10 -i $arg3 -i $arg5 \
    -filter_complex "[0:v]scale=1600:900:force_original_aspect_ratio=decrease,pad=1920:1080:320:0:color=$arg4,setdar=16/9,setsar=1/1,fps=fps=30,format=yuv420p[V1]; \
    [V1][1:v]overlay=(overlay_w/2)*-1+40:main_h-(overlay_h/2)-40[V2]; \
    [V2][2:v]overlay=0:450-(overlay_h/2)[V3]; \
    [V3]drawtext=fontfile=./Sanchez-Regular.ttf: text='$arg2': fontcolor=white: fontsize=64: x=(w-text_w)/2: y=(h-90-(text_h/2)); \
    [0:a]loudnorm=i=-24:tp=-2:lra=7" \
    -c:a aac -c:v libx264 -crf 23 \
    "$i.ts"

And now all that needs to be done is to concatenate the generated videos (1.ts, 2.ts, etc.) into one file and publish it back to Google Drive, where everyone can see it.
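To make the render and concatenate steps concrete, here is a rough sketch that builds both ffmpeg invocations as argument lists. It is not the project's code. `build_ffmpeg_args` and `build_concat_args` are hypothetical helpers, the per-clip filter simply mirrors the template command, and the concatenation assumes ffmpeg's `concat` filter with exactly one video and one audio stream per segment.

```python
from typing import List


def build_ffmpeg_args(video: str, caption: str, overlay_1: str,
                      background: str, overlay_2: str, output: str,
                      font: str = "./Sanchez-Regular.ttf") -> List[str]:
    """Build the per-clip command from one row of the sequence file."""
    # Note: captions containing quotes or colons would need escaping
    # for drawtext; that is left out of this sketch.
    filter_graph = ";".join([
        "[0:v]scale=1600:900:force_original_aspect_ratio=decrease,"
        f"pad=1920:1080:320:0:color={background},"
        "setdar=16/9,setsar=1/1,fps=fps=30,format=yuv420p[V1]",
        "[V1][1:v]overlay=(overlay_w/2)*-1+40:main_h-(overlay_h/2)-40[V2]",
        "[V2][2:v]overlay=0:450-(overlay_h/2)[V3]",
        f"[V3]drawtext=fontfile={font}:text='{caption}':fontcolor=white:"
        "fontsize=64:x=(w-text_w)/2:y=(h-90-(text_h/2))",
        "[0:a]loudnorm=i=-24:tp=-2:lra=7",
    ])
    # "-t 10" sits before the second input, as in the template command.
    return ["ffmpeg", "-hide_banner", "-i", video,
            "-t", "10", "-i", overlay_1, "-i", overlay_2,
            "-filter_complex", filter_graph,
            "-c:a", "aac", "-c:v", "libx264", "-crf", "23", output]


def build_concat_args(segments: List[str], output: str) -> List[str]:
    """Build a command that joins the rendered segments with the concat filter."""
    if not segments:
        raise ValueError("at least one segment is required")
    args = ["ffmpeg", "-hide_banner"]
    for segment in segments:
        args += ["-i", segment]
    # Feed each input's video and audio stream to the filter, in order.
    pads = "".join(f"[{i}:v][{i}:a]" for i in range(len(segments)))
    graph = f"{pads}concat=n={len(segments)}:v=1:a=1[v][a]"
    return args + ["-filter_complex", graph, "-map", "[v]", "-map", "[a]",
                   "-c:v", "libx264", "-c:a", "aac", output]


print(build_concat_args(["1.ts", "2.ts"], "final.mp4"))
```

Passing a list to something like `subprocess.run(args, check=True)` avoids shell quoting problems with file names such as `Mom & Dad.mp4`. The concat filter expects all segments to share the same frame size, which holds here only if every segment has been normalised to the template's 1920x1080 frame.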

Creating an API trigger that specifies the config for this media job makes it quite easy to kick off. Potentially anyone can do it.

    curl --location --request POST 'https://XXXXXXXX.execute-api.ap-south-1.amazonaws.com/test/video-concat/' \
    --header 'Content-Type: application/json' \
    --data-raw '{
      "input_folder": "XXXXXXXXXXTgtj9wZG3HIcb6b1dLLqg",
      "output_folder": "XXXXXXXXXjjLvduXwQM4j5lPHY7_6z6a",
      "sequence_file_name": "sequence",
      "template": "template",
      "output_file_prefix": "Short-Video-"
    }'

I think this was a very simple and elegant solution that removed the grunt work and gave us the flexibility to iterate the videos until the last minute, without much stress.

Under the hood

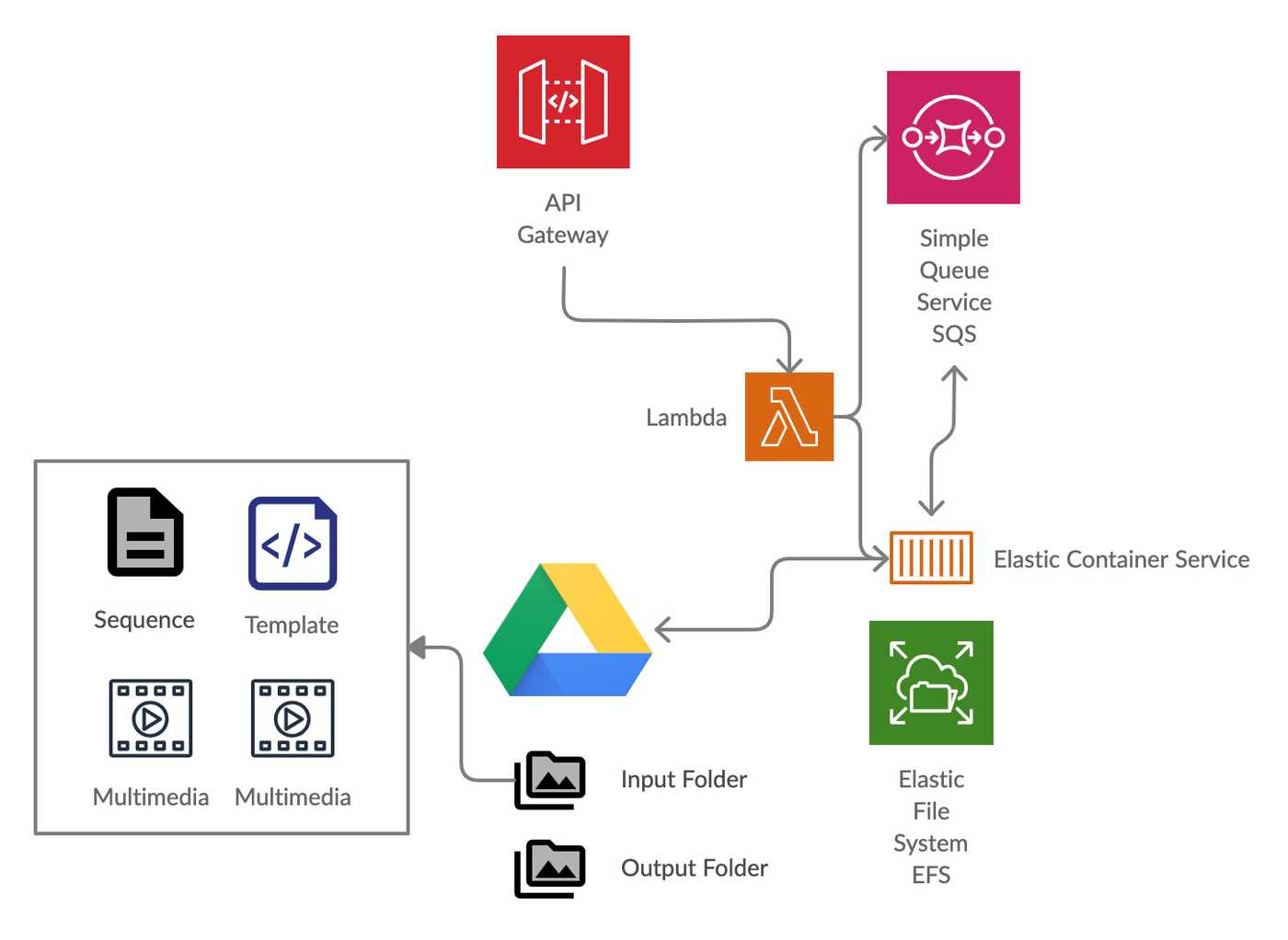

“A design is only as elegant as it is on the inside”. I am pretty proud of this simple and effective design. It is built on AWS ECS with Fargate, which is ideal for this type of burst job and is cost-effective. We create the infrastructure using Terraform, which makes it easy to bring everything up and destroy it when not in use. That is perfect for infrequent use like family events. We use Docker to provide an image for ECS.

The flow of the request:

- The user’s `POST` request is passed on to a Lambda Function via API Gateway

- The Lambda Function posts an event to an SQS Queue and updates the ECS service’s desired count to 1

- The ECS container reads from the queue in a while loop, until there are no messages

- The ECS container downloads all the files in the `input` folder on to EFS. It avoids downloading duplicates. It expects the sequence and template files to be present here

- It applies the template’s `ffmpeg` command to each video in the sequence

- It uses `ffmpeg` to concatenate the videos using ffmpeg filters

- It then pushes the final video to the `output` folder on Google Drive

- It then deletes the message from the queue, and checks for another message
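The two ends of this flow can be sketched roughly as below. This is an illustration under assumptions, not the deployed code: `handle_request` and `drain_queue` are hypothetical names, the `sqs` and `ecs` arguments stand for boto3 clients (or test doubles) passed in by the caller, and the queue URL, cluster and service names are placeholders. The download, render and upload work is hidden behind the `process` callback.

```python
import json
from typing import Any, Callable, Dict


def handle_request(body: str, sqs: Any, ecs: Any,
                   queue_url: str, cluster: str, service: str) -> Dict[str, Any]:
    """Lambda side: queue the job, then ask ECS to run one task."""
    job = json.loads(body)  # fails early on malformed JSON
    sqs.send_message(QueueUrl=queue_url, MessageBody=json.dumps(job))
    ecs.update_service(cluster=cluster, service=service, desiredCount=1)
    return {"statusCode": 202, "body": json.dumps({"queued": True})}


def drain_queue(sqs: Any, queue_url: str,
                process: Callable[[Dict[str, Any]], None]) -> int:
    """Container side: handle messages until the queue is empty."""
    handled = 0
    while True:
        response = sqs.receive_message(
            QueueUrl=queue_url, MaxNumberOfMessages=1, WaitTimeSeconds=10
        )
        messages = response.get("Messages", [])
        if not messages:
            return handled  # nothing left to do
        for message in messages:
            # Download, render, concatenate and upload happen in here.
            process(json.loads(message["Body"]))
            # Delete only after the job succeeded, so a crash leaves
            # the message on the queue for another attempt.
            sqs.delete_message(
                QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"]
            )
            handled += 1
```

Deleting the message only after `process` returns matches the order in the list above: a failed job stays on the queue instead of being lost.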

I was using CPUs instead of GPUs, which makes this slower. At the moment, to use GPUs with ECS, you need to have an on-demand instance. This would have compromised the serverless architecture and robbed me of the thrill of generating the video with one click. Managed services like AWS Elemental MediaConvert don’t provide raw access to ffmpeg, so we can’t create the templates with them.

You can find code here