The lockdown opened up the opportunity to get a coach from anywhere in the world. That came with advantages and disadvantages: you get the best possible training sessions designed for you, but how do you get your technique reviewed? I was looking for a simple video tool that would help me create a short film for my coach to review.

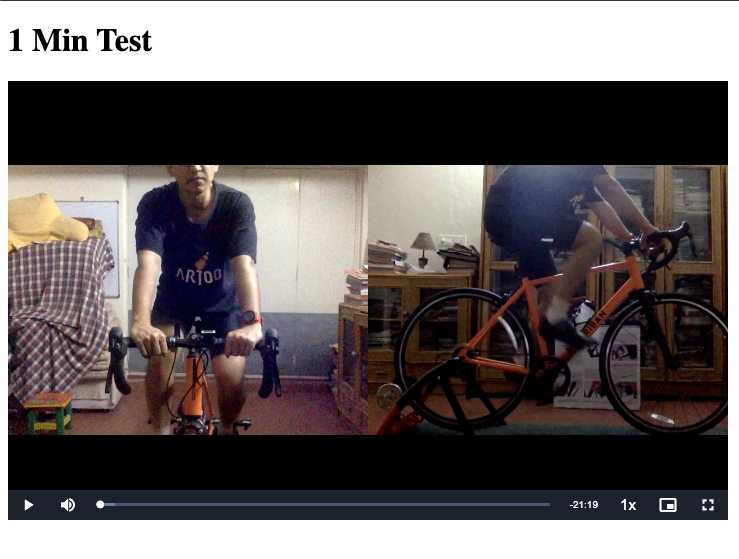

I spent some time on video editing and playing with ffmpeg. With two laptops, I was able to record myself cycling from two different angles. Then, with ffmpeg's `hstack` video filter, I stacked the two videos side by side, aligning them based on their timestamps. Using the AWS Elemental MediaConvert service, I converted the result into an HTTP Live Streaming (HLS) file. Finally, using VideoJS for the player, I hosted it as a simple HTML page on S3 to share with my coach. The result:

It turned out that my saddle height was incorrect. I was quite thrilled with the value of the feedback I received for such a relatively simple effort.
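Going back to the two-camera setup, here is a minimal sketch of the alignment and stacking step, not the code I actually used. It assumes you know the wall-clock time each laptop started recording (the `left_start`/`right_start` values and the helper name `hstack_command` are hypothetical). The camera that started earlier is trimmed with an input-level `-ss` so both feeds show the same moment, and then ffmpeg's `hstack` filter places them side by side. `hstack` requires both inputs to have the same height, which holds when both laptops record at the same resolution.

```python
from datetime import datetime


def hstack_command(left_video, right_video, left_start, right_start, output):
    """Build an ffmpeg command that places two recordings side by side.

    The recording that started earlier is trimmed so both show the same moment.
    left_start / right_start are the (assumed known) recording start times.
    """
    offset = (right_start - left_start).total_seconds()
    left_args = ["-i", left_video]
    right_args = ["-i", right_video]
    if offset > 0:
        # Left camera started first: skip its first `offset` seconds.
        left_args = ["-ss", f"{offset:.3f}"] + left_args
    elif offset < 0:
        # Right camera started first: skip its first `-offset` seconds.
        right_args = ["-ss", f"{-offset:.3f}"] + right_args
    return (
        ["ffmpeg"]
        + left_args
        + right_args
        + ["-filter_complex", "[0:v][1:v]hstack=inputs=2[v]", "-map", "[v]", output]
    )
```

The returned list can be passed straight to `subprocess.run(cmd, check=True)`. Building the argument list instead of a shell string avoids quoting problems with the filter graph.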

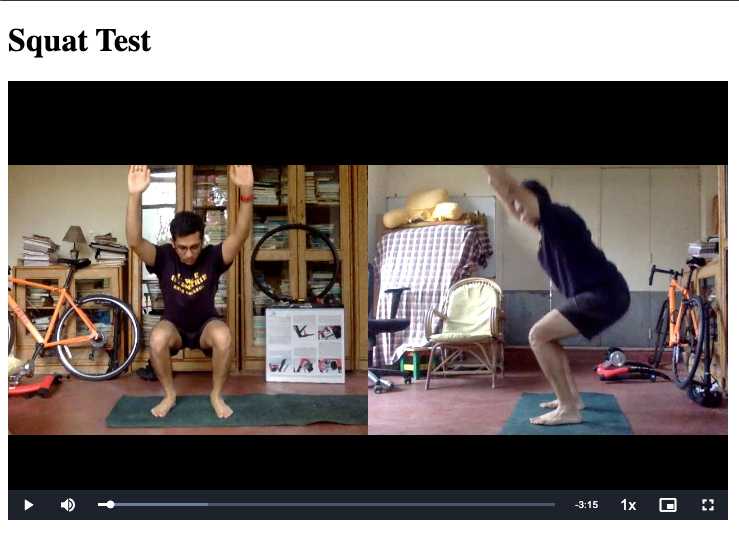

I then applied the same approach to some simple tests my coach wanted to see, to get a sense of my flexibility and form. I pressed transition on my watch each time I switched exercises. Later, I used the timestamps from the Garmin file to trim the video down to only the interesting segments. Again, I got some very valuable feedback about the tightness in my calves, along with a specific strength and conditioning plan from my coach.
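A minimal sketch of the lap-based trimming, assuming the activity was exported as TCX (Garmin's Training Center XML, where each `<Lap>` carries a `StartTime` attribute) and that the video's start time is known. The helper names are hypothetical, and naive video start times are assumed to be UTC. Each pair of consecutive lap starts becomes one segment, turned into an ffmpeg trim command.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

TCX_NS = {"tcx": "http://www.garmin.com/xmlschemas/TrainingCenterDatabase/v2"}


def lap_start_times(tcx_text):
    """Return the StartTime of every <Lap> in a TCX document as aware datetimes."""
    root = ET.fromstring(tcx_text)
    times = []
    for lap in root.iterfind(".//tcx:Lap", TCX_NS):
        # StartTime looks like 2021-01-10T08:00:10Z; older Pythons reject "Z".
        stamp = lap.get("StartTime").replace("Z", "+00:00")
        times.append(datetime.fromisoformat(stamp))
    return times


def lap_segments(lap_times, video_start):
    """(start, end) offsets in seconds from the video start, one per lap.

    The last lap is skipped because its end time is not a lap start.
    A naive video_start is assumed to be in UTC.
    """
    if video_start.tzinfo is None:
        video_start = video_start.replace(tzinfo=timezone.utc)
    offsets = [(t - video_start).total_seconds() for t in lap_times]
    return list(zip(offsets, offsets[1:]))


def trim_command(src, dst, start_s, end_s):
    """ffmpeg command that re-encodes only [start_s, end_s) of src into dst."""
    return ["ffmpeg", "-ss", f"{start_s:.3f}", "-i", src,
            "-t", f"{end_s - start_s:.3f}", dst]
```

Re-encoding (no `-c copy`) keeps the cuts frame-accurate. Stream copy would snap them to the nearest keyframe.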

But how do you apply this approach to running? You move in and out of the cameras' field of view, so it would get quite boring for the coach to wait every lap for the few seconds you are on screen. And you cannot trim the clips based on time, because your pace is not constant.

I placed the two laptops back to back on a stool on my running track, trying to cover the longest straight section of the track I could find.

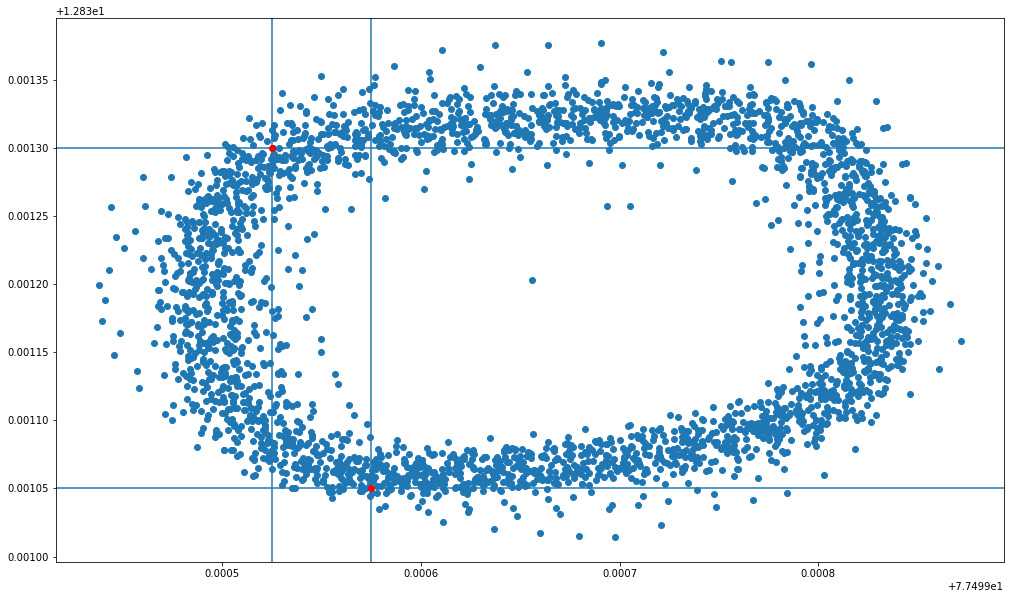

I plotted the GPS coordinates of my run. Through trial and error, I found the two points that marked the cameras' field of view. Note that my mental picture of the track was different from its actual north-south orientation, which you can see in the plot.
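To get the coordinates in the first place, here is a minimal sketch that reads the track points, assuming the run was exported as a GPX 1.1 file (the helper name `read_track` is hypothetical). Plotting longitude on the x-axis against latitude on the y-axis, for example with matplotlib, gives the north-up view of the track.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

GPX_NS = {"gpx": "http://www.topografix.com/GPX/1/1"}


def read_track(gpx_text):
    """Return a list of (time, lat, lon) tuples from a GPX 1.1 document."""
    root = ET.fromstring(gpx_text)
    points = []
    for pt in root.iterfind(".//gpx:trkpt", GPX_NS):
        stamp = pt.findtext("gpx:time", namespaces=GPX_NS)
        if stamp is None:
            continue  # a point without a timestamp cannot be matched to video
        when = datetime.fromisoformat(stamp.replace("Z", "+00:00"))
        points.append((when, float(pt.get("lat")), float(pt.get("lon"))))
    return points
```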

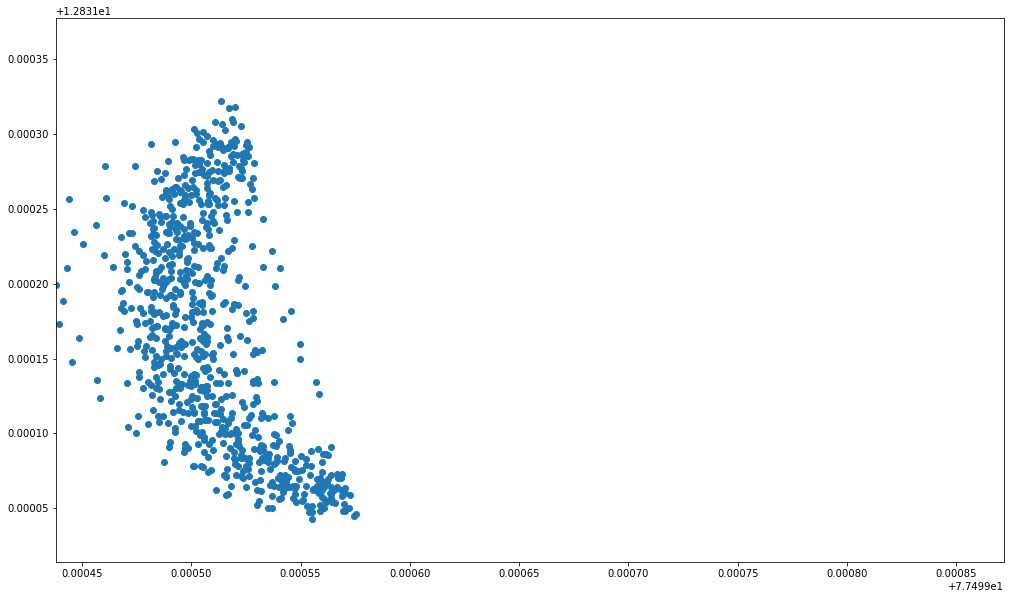

Drawing a straight line through these two points and keeping only the GPS points that fall on the cameras' side of that line gives you the points of interest.
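One common way to express "which side of the line" is the sign of a 2D cross product. This is a sketch of that technique, not necessarily the exact equation I used. Points are `(x, y)` pairs, taken here as `(lon, lat)`; which sign corresponds to the cameras' side depends on the order of the two reference points `a` and `b`, so `want_positive` has to be chosen by inspecting the plot. Working directly in degrees is fine: scaling the x and y axes by positive factors (as a map projection locally does) multiplies the cross product by a positive number and never flips its sign.

```python
def side_of_line(p, a, b):
    """2D cross product (b - a) x (p - a).

    Positive: p is left of the directed line a->b; negative: right; zero: on it.
    """
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def points_on_side(points, a, b, want_positive=True):
    """Keep the (time, lat, lon) points lying strictly on one side of a->b."""
    kept = []
    for t, lat, lon in points:
        s = side_of_line((lon, lat), a, b)
        if (s > 0) if want_positive else (s < 0):
            kept.append((t, lat, lon))
    return kept
```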

Using this equation, you can get the timestamps needed to trim the video down to only the interesting segments (where you are on screen):

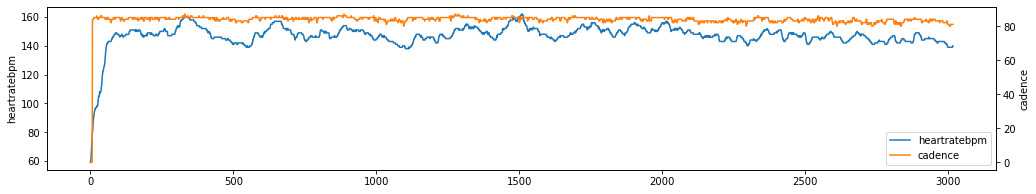
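Once the in-view points are known, their timestamps still have to be grouped into one clip per pass in front of the cameras. A minimal sketch, assuming the timestamps are sorted and that a gap larger than `max_gap` (a hypothetical threshold, 3 seconds here, assuming roughly one GPS sample per second) means you left the frame and came back on a later lap. Subtracting the video's start time from each `(start, end)` pair gives the trim points.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def visible_segments(
    times: List[datetime], max_gap: timedelta = timedelta(seconds=3)
) -> List[Tuple[datetime, datetime]]:
    """Group sorted in-view timestamps into (start, end) runs.

    A new run starts whenever two consecutive in-view points are more than
    max_gap apart, i.e. the runner left the cameras' view and came back.
    """
    segments = []
    for t in times:
        if segments and t - segments[-1][1] <= max_gap:
            segments[-1][1] = t  # still the same pass: extend the current run
        else:
            segments.append([t, t])  # gap too large: start a new run
    return [(start, end) for start, end in segments]
```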

I realized that without key metrics (heart rate, cadence, etc.), you lose context of where you are in the run, so I plotted these metrics and rendered them as a video file.
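A metrics video only helps if each frame shows the value at that exact moment of the footage. Watch samples and video frames are on different clocks, so one way to sync them is to interpolate each metric at every frame time before plotting. This is a sketch under those assumptions (linear interpolation, clamping outside the recorded range, hypothetical helper names), not the code I used.

```python
from bisect import bisect_left
from datetime import datetime, timedelta
from typing import List, Tuple


def metric_at(samples: List[Tuple[datetime, float]], t: datetime) -> float:
    """Linearly interpolate a time-sorted list of (time, value) samples at t.

    Times before the first or after the last sample are clamped to the ends.
    """
    times = [s[0] for s in samples]
    i = bisect_left(times, t)
    if i == 0:
        return samples[0][1]
    if i == len(samples):
        return samples[-1][1]
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    frac = (t - t0) / (t1 - t0)  # timedelta / timedelta -> float
    return v0 + frac * (v1 - v0)


def frame_values(samples, video_start: datetime, fps: float, n_frames: int) -> List[float]:
    """Metric value for each video frame, so the rendered plot stays in sync."""
    # k / fps per frame (rather than adding a fixed step) avoids rounding drift.
    return [metric_at(samples, video_start + timedelta(seconds=k / fps))
            for k in range(n_frames)]
```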

Finally, I stacked the 3 video feeds together (front, back and metrics).
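A minimal sketch of a three-way stack, assuming the front and back feeds are already aligned. Because `hstack` requires every input to have the same height, and a rendered metrics video usually will not match the webcam resolution, each stream is first scaled to a common height (`height=720` is an arbitrary choice). In ffmpeg's `scale` filter, a width of `-2` keeps the aspect ratio while rounding to an even width. The helper name `stack_three` is hypothetical.

```python
def stack_three(front, back, metrics, output, height=720):
    """ffmpeg command stacking three videos left to right at a common height."""
    # Scale input i to the shared height and label it [v0], [v1], [v2].
    scaled = ";".join(f"[{i}:v]scale=-2:{height}[v{i}]" for i in range(3))
    graph = f"{scaled};[v0][v1][v2]hstack=inputs=3[out]"
    return ["ffmpeg", "-i", front, "-i", back, "-i", metrics,
            "-filter_complex", graph, "-map", "[out]", output]
```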

I have uploaded the code here.